Sarah Thomas

Subject Matter Expert

10.26.2022 • 10 min read

Learn what the binomial distribution is in probability. Read a list of the criteria that must be met to apply the formula, and learn how to calculate it.

Heads you win, tails I win. Have you wondered about the likelihood of winning 7 out of 10 coin flips or even 10 out of 10? A binomial distribution can give you the answer!

A binomial distribution is a discrete probability distribution for a random variable $X$, where $X$ is the number of successes you get from repeating a random experiment with just two possible outcomes. We designate one of these outcomes as a "success" and the other as a "failure."

The binomial distribution gives you the probability of getting $x$ successes across $n$ trials.

The easiest example of binomial probability involves a coin flip. When you flip a coin, there are exactly two possible outcomes: heads or tails. A binomial probability distribution gives you the probability of getting $x$ heads across $n$ coin flips.

You can only use the binomial distribution under certain conditions:

The distribution is for a repeated experiment (or game) where each round or trial has just two outcomes. Typically, you designate one of these outcomes as the “desired outcome” or “success” and the other outcome as an “undesired outcome” or “failure.”

The outcomes of the experiment are mutually exclusive, meaning for each round of the experiment or game, you can only get one of the two outcomes. For example, each time you flip a coin, you either get heads or tails, but you cannot get both heads and tails from one flip.

Independent trials. Each trial (or round) of the experiment must be an independent event. This means that the outcome of one round of the experiment does not affect the outcome of any other.

The probabilities associated with each outcome are fixed across all trials of the experiment. No matter how many times you toss the coin, the probability of getting heads and the probability of getting tails should stay the same.

Each trial of an experiment that fits these criteria is called a Bernoulli trial, and the full sequence of repeated trials is often called a binomial experiment.
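The criteria above can be made concrete with a short simulation. The R sketch below is an illustration, not part of the original article: it builds each round of 10 coin flips out of independent Bernoulli trials (0 = tails, 1 = heads), counts the successes, and compares how often exactly 7 heads come up with the exact binomial probability from `dbinom`. The seed, the number of rounds, and the variable names are arbitrary choices.

```r
# Simulate a binomial experiment as a sum of independent Bernoulli trials.
set.seed(1)                 # arbitrary seed so the run is reproducible
n_flips  <- 10              # trials per round (n)
p_heads  <- 0.5             # fixed probability of success on every trial (p)
n_rounds <- 100000          # number of simulated rounds

# One row per round, one column per flip; each entry is an independent 0/1 draw.
flips <- matrix(rbinom(n_rounds * n_flips, size = 1, prob = p_heads),
                nrow = n_rounds)
heads <- rowSums(flips)     # number of successes (heads) in each round

simulated <- mean(heads == 7)                           # share of rounds with 7 heads
exact     <- dbinom(7, size = n_flips, prob = p_heads)  # 120/1024, about 0.117

c(simulated = simulated, exact = exact)
```

With this many rounds, the simulated share should land close to the exact value, which is what you would expect if the count of heads really follows a binomial distribution.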

Olanrewaju Michael Akande is a professor in the Department of Statistical Science at Duke University. He covers the binomial distribution extensively in one of his lectures.

To construct a binomial distribution or to calculate binomial probabilities, you need to have the following three parameters:

- $n$, the fixed number of trials (i.e., the number of times you will run the experiment)

- $p$, the probability of a success (i.e., the probability of getting the desired outcome)

- $1 - p$, the probability of failure

If you know the probability of success, you also know the probability of failure, and vice versa. Since a binomial experiment has only two possible outcomes, the probability of failure is always equal to one minus the probability of success, and the probability of success is always equal to one minus the probability of failure. We use the letter $p$ to refer to the probability of success.

This is why we usually write the probability of failure as $1 - p$. You might also see the probability of failure denoted by the letter $q$, where $q = 1 - p$.

A nice feature of the binomial distribution is that its mean, variance, and standard deviation are easy to calculate. Here are the formulas.

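The mean or expected value of $X$ is

$$\mu = E[X] = np$$

The variance of $X$ is

$$\sigma^2 = \operatorname{Var}(X) = np(1-p) = npq$$

The standard deviation of $X$ is

$$\sigma = \sqrt{np(1-p)} = \sqrt{npq}$$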

Here, again, you have the number of trials $n$, the probability of success $p$, and the probability of failure $q = 1 - p$.
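As a quick check on the formulas $E[X] = np$, $\operatorname{Var}(X) = np(1-p)$, and $\sigma = \sqrt{np(1-p)}$, the R sketch below computes all three for $n = 10$ and $p = 0.5$ and then recomputes the mean and variance directly from their definitions using `dbinom`. The helper name `binom_summary` is hypothetical and chosen only for this example.

```r
# Hypothetical helper: mean, variance, and SD of a Binomial(n, p) variable
binom_summary <- function(n, p) {
  q <- 1 - p                       # probability of failure
  c(mean     = n * p,              # E[X] = np
    variance = n * p * q,          # Var(X) = npq
    sd       = sqrt(n * p * q))    # SD(X) = sqrt(npq)
}

stats <- binom_summary(n = 10, p = 0.5)
stats   # mean 5, variance 2.5, sd about 1.58

# Cross-check against the definitions, using every possible value of X
x  <- 0:10
px <- dbinom(x, size = 10, prob = 0.5)     # P(X = x)
mean_direct <- sum(x * px)                 # sum of x * P(X = x)
var_direct  <- sum((x - mean_direct)^2 * px)
c(mean_direct, var_direct)                 # 5 and 2.5, matching the formulas
```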

Binomial distributions are closely related to Bernoulli experiments. A Bernoulli experiment (or Bernoulli trial) is the name given to the type of experiment that binomial distributions help describe. The name comes from the 17th-century Swiss mathematician Jacob Bernoulli, one of several famous mathematicians from the Bernoulli family.

A Bernoulli experiment is a random experiment with just two possible outcomes: “success” and “failure.” The outcomes of each Bernoulli trial (or round) must be independent of the others. The probability of success and failure must be fixed across all trials.

A coin flip, as you’ve already seen, is an example of a Bernoulli experiment. So is the act of shooting a free throw in basketball, so long as you assume a fixed free throw percentage across all shots. A Bernoulli experiment can also model something like the probability of giving birth.

Under some circumstances, it's possible to convert an experiment with more than two outcomes into a Bernoulli experiment. You can do this by dividing all possible outcomes into two categories: success and failure.

As an example, think of a game of cards where you draw one card from a deck. There are 52 cards in a deck of playing cards, so technically, there are 52 outcomes for this experiment. If you want to convert the experiment into a Bernoulli experiment, you simply divide the 52 outcomes into two groups: “success” and “failure.” You might designate all black cards as “successes,” and all red cards as “failures,” or you might designate all spades as “successes” and all other suits as “failures.”
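Here is a minimal R sketch of that conversion, assuming a standard 52-card deck and using the two "success" definitions from the paragraph above. To keep $p$ fixed and the trials independent when you draw repeatedly, it also assumes each card is put back and the deck reshuffled before the next draw; the object names and the 10-draw example are illustrative.

```r
# Build a standard 52-card deck: 13 cards in each of 4 suits
suits <- c("spades", "hearts", "diamonds", "clubs")
deck  <- data.frame(
  suit  = rep(suits, each = 13),
  color = rep(c("black", "red", "red", "black"), each = 13)
)

# Collapse the 52 outcomes into success/failure and read off p for each definition
p_black <- mean(deck$color == "black")   # 26/52 = 0.5
p_spade <- mean(deck$suit == "spades")   # 13/52 = 0.25

# Assuming draws with replacement, 10 draws form a binomial experiment:
# probability of exactly 3 spades in 10 draws
dbinom(3, size = 10, prob = p_spade)
```

Without replacement, the probability of a spade would change from draw to draw, which breaks the fixed-probability criterion.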

The probability mass function (PMF) of a binomial distribution (sometimes referred to as the binomial probability formula) takes the following form.

$$P(X = x) = \binom{n}{x} p^x (1-p)^{n-x}$$

Where:

$n$ is the number of trials

$p$ is the probability of success

$1 - p$ is the probability of failure

$x$ is the observed number of successes

Note:

The notation $\binom{n}{x}$ indicates a binomial coefficient: $\binom{n}{x} = \frac{n!}{x!\,(n-x)!}$. The symbol ! denotes a factorial.

A probability mass function is a function that gives you the probability that a discrete random variable $X$ will equal some specific value $x$, written $P(X = x)$. Therefore, you can use the PMF of the binomial distribution to calculate the probability of getting a specific number of successes $x$ from $n$ trials.
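To show that the binomial probability formula $\binom{n}{x} p^x (1-p)^{n-x}$ is all there is to the PMF, the R sketch below writes it out with base R's `choose` and compares it with R's built-in `dbinom` for every possible $x$. The function name `binom_pmf` and the values $n = 10$, $p = 0.3$ are illustrative assumptions.

```r
# Hypothetical helper: the binomial PMF written directly from the formula
binom_pmf <- function(x, n, p) {
  choose(n, x) * p^x * (1 - p)^(n - x)   # C(n, x) * p^x * (1 - p)^(n - x)
}

x <- 0:10   # every possible number of successes when n = 10
by_formula <- binom_pmf(x, n = 10, p = 0.3)
by_dbinom  <- dbinom(x, size = 10, prob = 0.3)

all.equal(by_formula, by_dbinom)   # the two agree
sum(by_formula)                    # the probabilities over all x add up to 1
```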

The shape of a binomial distribution depends on its parameters: $n$ and $p$. Here are some examples of what a binomial distribution can look like.

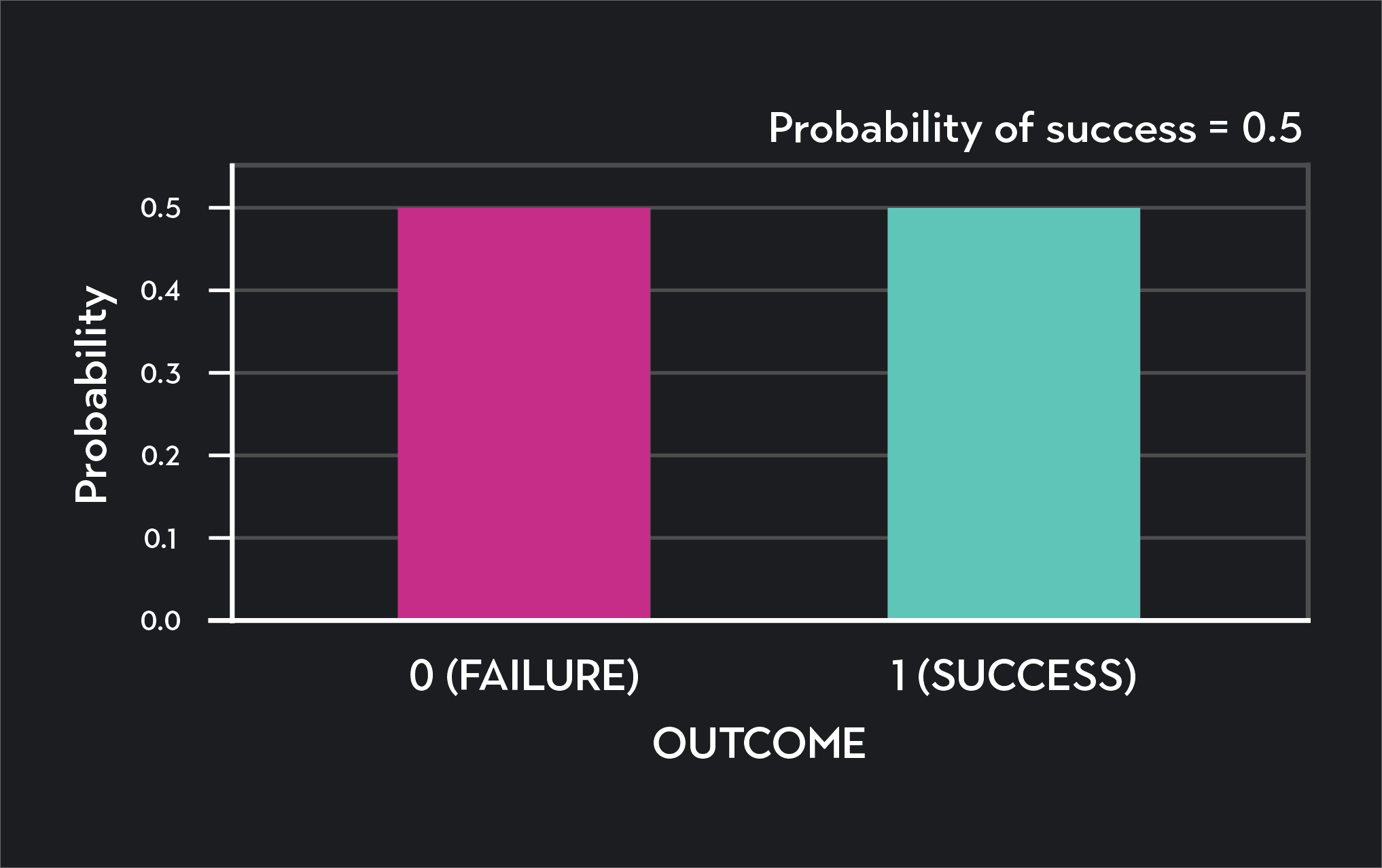

Let's start with the simplest Bernoulli experiment: a coin flip where the probability of success (the coin landing heads) is 0.5 and the probability of failure (the coin landing tails) is 0.5. We'll see that the distribution depends not just on these probabilities but also on the number of trials $n$.

The probability distribution for flipping a fair coin once (n=1) looks like this. This is the probability distribution for a single Bernoulli trial. Notice that there is an equal chance of success (heads) and failure (tails). Both outcomes occur with a 50% chance.

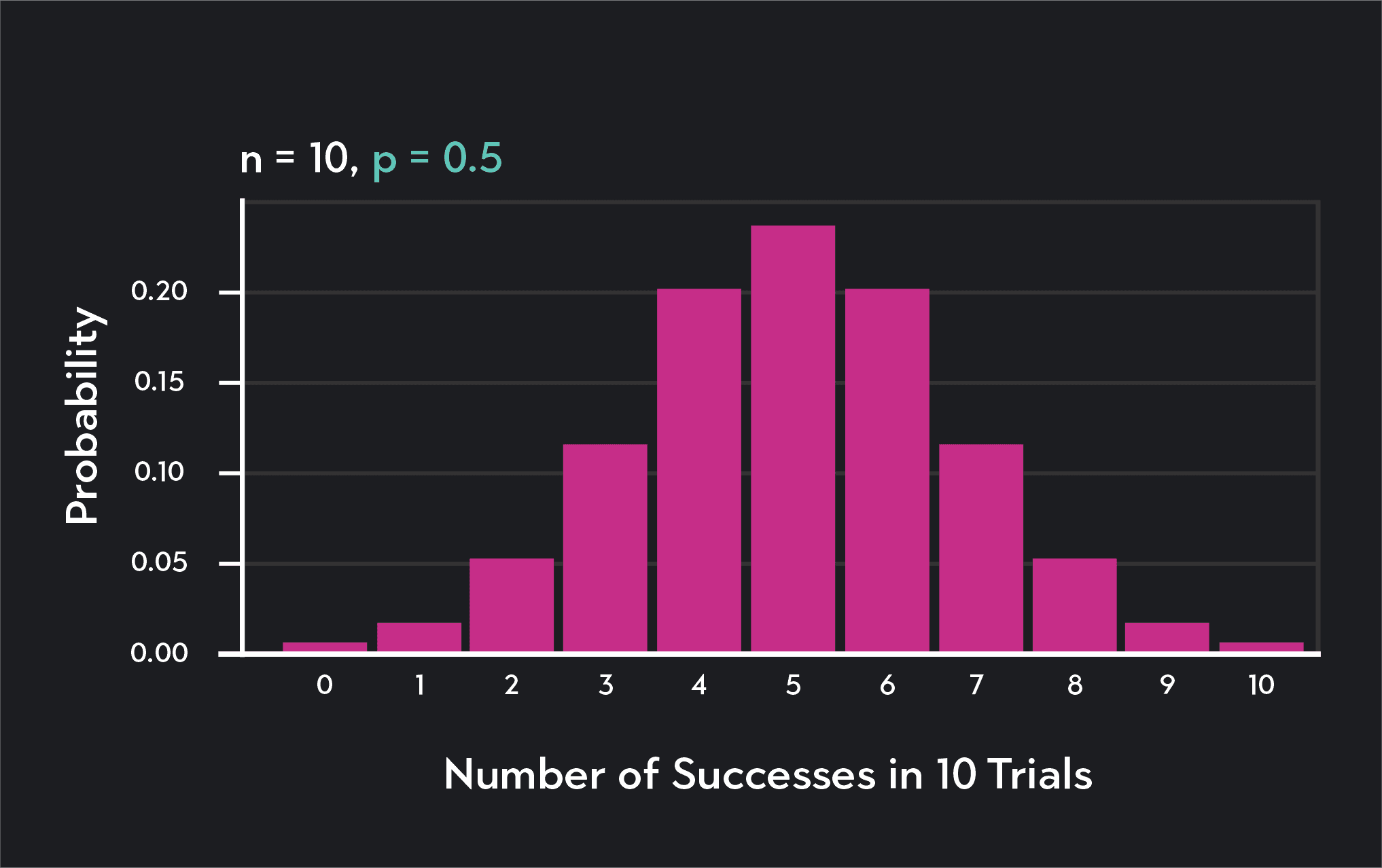

Now, here is what the binomial distribution looks like if we flip the coin ten times (n=10). Notice that the horizontal axis of the graph changes and that there are now eleven possible values for $X$: you could get anything from 0 to 10 successes across the ten trials.

This particular distribution tells you that after ten coin flips, the probability of getting zero heads (or zero successes) is less than 0.001 (or 0.1%). The probability of 1 success is approximately 0.01 (or 1%), and so on. The mean of this distribution is equal to $np$, which is $10 \times 0.5 = 5$. This is the expected number of successes after ten flips.
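The numbers quoted for ten fair coin flips can be reproduced in R with `dbinom`. This sketch tabulates $P(X = x)$ for all eleven values of $x$, picks out the probabilities of 0 and 1 heads, and recovers the mean of 5 from the table; the variable names are illustrative.

```r
# Binomial distribution for n = 10 flips of a fair coin (p = 0.5)
x  <- 0:10                                  # eleven possible numbers of heads
px <- dbinom(x, size = 10, prob = 0.5)      # P(X = x) for each x

round(data.frame(heads = x, probability = px), 4)

px[x == 0]      # P(X = 0) = 1/1024, just under 0.001
px[x == 1]      # P(X = 1) = 10/1024, about 0.01
sum(x * px)     # mean: 10 * 0.5 = 5
```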

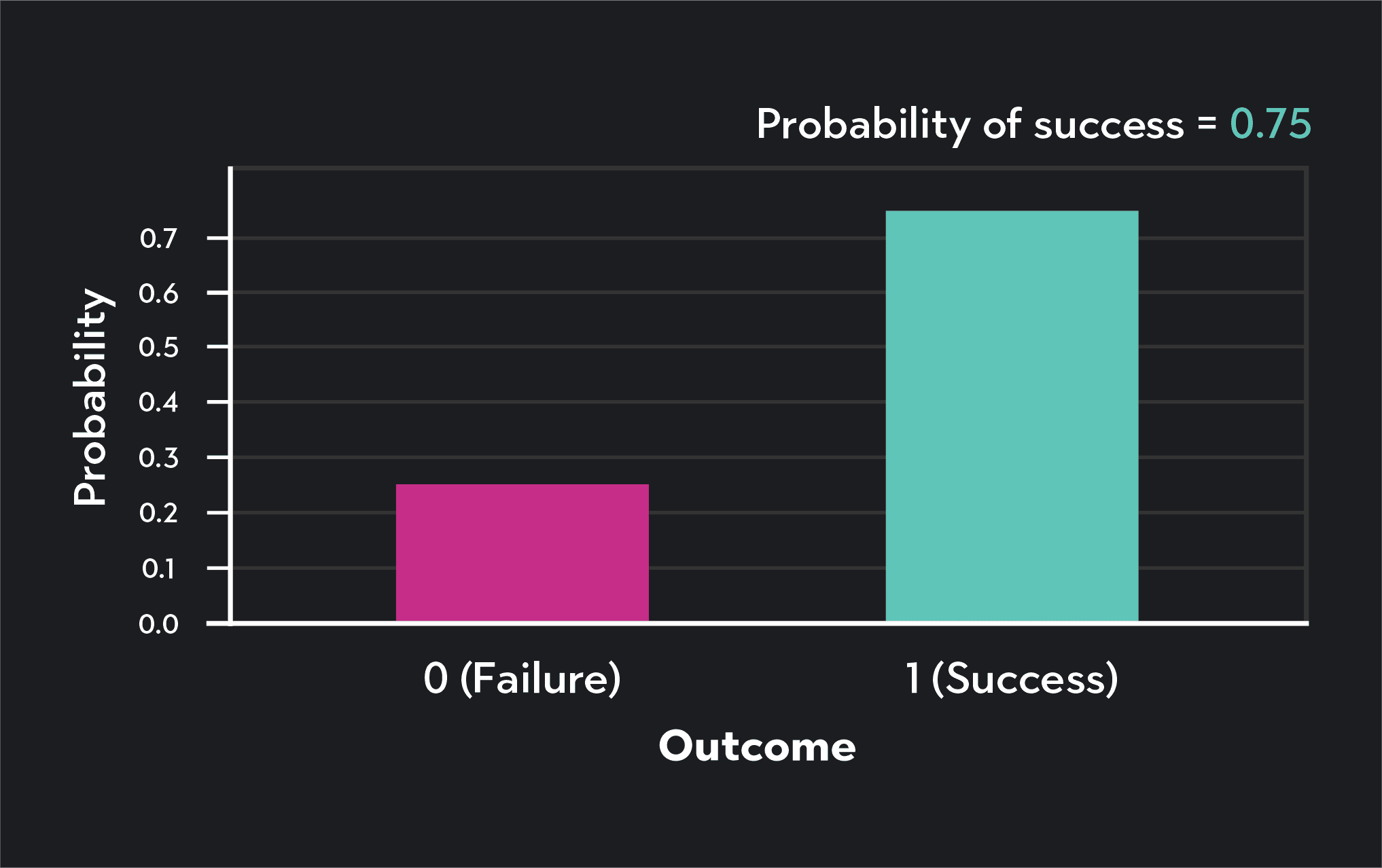

Similarly, here is the Bernoulli distribution of shooting a single free throw when your free throw percentage is 75% (p=0.75). This graph shows that on a single trial, you’ll make the shot with a probability of 0.75, and you will miss (i.e., fail) with a probability of 1-0.75 or 0.25.

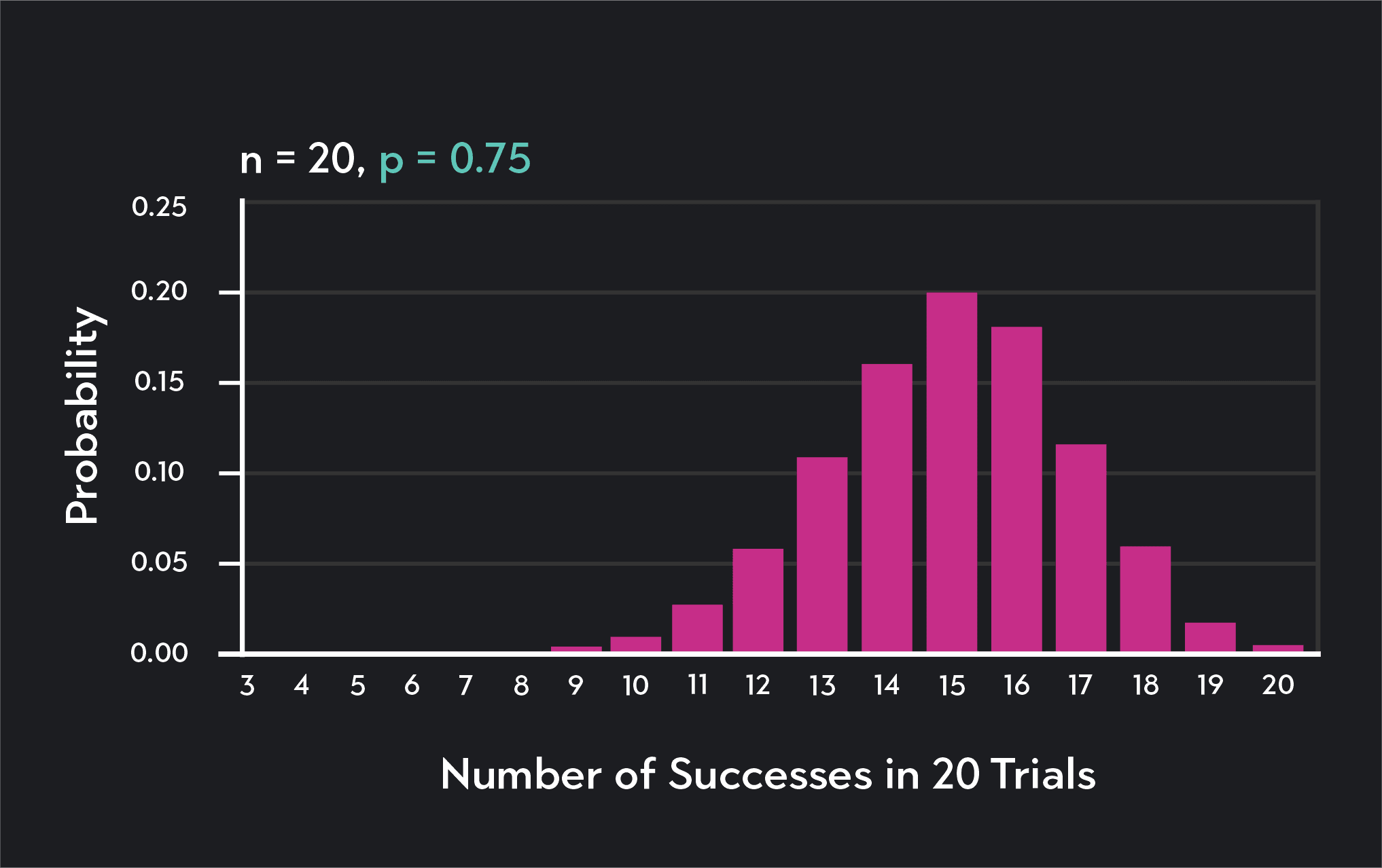

Below is the binomial distribution showing the probability of making $x$ free throws after 20 shots. We still assume the free throw percentage to be p = 0.75.

Notice that this distribution looks quite different from the binomial distribution for ten repeated coin flips. That's because both parameters ($n$ and $p$) have changed. The probability of success is now higher than 0.5, so the distribution is left-skewed: most of the probability sits at high numbers of successes, with a longer tail toward low numbers. If instead $p$ were smaller than 0.5, the distribution would be right-skewed. The number of trials $n$ has also increased, so the horizontal axis now runs from 0 to 20 successes.

This distribution shows that when your free throw percentage is 0.75, the probability of making fewer than half of your shots is very low (under 1%). The chance of making exactly 16 out of 20 shots is about 19%. The mean of the distribution is $np = 20 \times 0.75 = 15$, which means you can expect to make 15 out of 20 shots on average.
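The three free throw figures can be checked with a few lines of R. In this sketch, "fewer than half" of 20 shots is taken to mean 9 or fewer makes, so it uses the cumulative probability $P(X \le 9)$ from `pbinom`.

```r
n <- 20      # shots attempted
p <- 0.75    # free throw percentage as a probability

exactly_16   <- dbinom(16, size = n, prob = p)   # P(X = 16), about 0.19
under_half   <- pbinom(9, size = n, prob = p)    # P(X <= 9), well under 0.01
mean_makes   <- n * p                            # np = 15

c(exactly_16 = exactly_16, under_half = under_half, mean = mean_makes)
```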

You can use the binomial distribution to calculate numerous statistics and probabilities. Here are some more examples.

Assume you are playing a game where a fair coin is tossed 50 times. What is the probability of getting exactly 20 tails?

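Here you can use the probability mass function to calculate the probability. All you need to do is plug in the relevant values for $n$, $p$, and $x$, counting a tail as a success: $n = 50$ trials, $p = 0.5$, and $x = 20$ successes. This gives $P(X = 20) = \binom{50}{20}(0.5)^{20}(0.5)^{30} \approx 0.0419$.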
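In R, the same calculation can be done either by typing out the formula with `choose` or by calling `dbinom`; the sketch below does both so you can see they agree. The variable names are illustrative.

```r
# P(exactly 20 tails in 50 tosses of a fair coin), counting a tail as a success
n <- 50
p <- 0.5
x <- 20

by_formula <- choose(n, x) * p^x * (1 - p)^(n - x)
by_dbinom  <- dbinom(x, size = n, prob = p)

c(by_formula, by_dbinom)   # both about 0.0419
```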

What is the standard deviation for a binomial distribution representing a fair coin that’s tossed 25 times?

To answer this question, plug $n = 25$ and $p = 0.5$ into the formula for the standard deviation of a binomial distribution: $\sigma = \sqrt{np(1-p)} = \sqrt{25 \times 0.5 \times 0.5} = \sqrt{6.25} = 2.5$.
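A short R sketch of this calculation, with a cross-check that computes the standard deviation straight from the definition of variance, $\sum_x (x - np)^2 \, P(X = x)$, instead of the shortcut formula. The variable names are illustrative.

```r
n <- 25     # tosses
p <- 0.5    # fair coin

sd_formula <- sqrt(n * p * (1 - p))   # sqrt(6.25) = 2.5

# Same value from the definition of variance, using every possible x
x <- 0:n
sd_direct <- sqrt(sum((x - n * p)^2 * dbinom(x, size = n, prob = p)))

c(sd_formula, sd_direct)
```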

A WNBA player has an 85% free throw percentage. If the player is expecting to shoot 250 free throws in a season, how many should she expect to make?

This question asks for the expected value, or mean, of the binomial distribution. We know the mean is equal to $np$, so we simply plug in $n = 250$ and $p = 0.85$: $np = 250 \times 0.85 = 212.5$. On average, she should expect to make about 212 or 213 of her free throws.
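The same expected value in R, plus a check that summing $x \, P(X = x)$ over all 251 possible outcomes gives the same answer as the shortcut $np$. The variable names are illustrative.

```r
n <- 250    # free throws attempted in the season
p <- 0.85   # free throw percentage as a probability

expected_makes <- n * p
expected_makes   # 212.5

# Cross-check with the definition of expected value
x <- 0:n
expected_direct <- sum(x * dbinom(x, size = n, prob = p))
expected_direct  # also 212.5, up to floating-point rounding
```

An expected value does not have to be a whole number; it is a long-run average, not a count you will observe in any single season.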

This next example requires converting an experiment with more than two outcomes into a Bernoulli experiment.

An American roulette wheel has 38 numbered pockets: 1 through 36, colored red and black, plus a green 0 and 00. Although there are 38 unique outcomes when you spin a roulette wheel, as someone betting on where the ball will land, the outcomes you care about are simply "success" or "failure," win or lose.

Suppose you want to bet that a roulette ball will land on a low number (1-18). Using a binomial distribution, what is the probability of winning exactly 12 out of 20 times?

The roulette wheel has 38 pockets, and you are betting on the chances of the ball landing in one of 18 pockets. Your probability of success on each trial is $p = 18/38 \approx 0.4737$. Your probability of failure is $1 - p \approx 0.5263$. You are spinning the wheel 20 times, so $n = 20$. Now that you have the parameters you need, plug them into the probability mass function: $P(X = 12) = \binom{20}{12}\left(\tfrac{18}{38}\right)^{12}\left(\tfrac{20}{38}\right)^{8} \approx 0.095$.
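Here is the roulette calculation as an R sketch. It keeps $p$ as the exact fraction 18/38 rather than the rounded 0.4737, and checks `dbinom` against the written-out formula; the variable names are illustrative.

```r
p <- 18 / 38   # probability of a low number (1-18) on one spin
n <- 20        # number of spins

win_12 <- dbinom(12, size = n, prob = p)            # P(exactly 12 wins in 20 spins)
by_formula <- choose(n, 12) * p^12 * (1 - p)^(n - 12)

c(win_12, by_formula)   # both roughly 0.095
```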

To calculate a binomial probability using R, you can use the following commands.

To find an exact probability, $P(X = x)$, use the dbinom command.

For example, what is the probability of making 20 out of 30 free throws with a 60% free throw percentage?

```r
round(dbinom(20, size = 30, prob = 0.6), 3)
#> [1] 0.115
```

To find the probability that $X$ is less than or equal to some value $x$, $P(X \le x)$, use the pbinom command. This command gives you cumulative probabilities.

For example, what is the probability of making 20 or fewer shots out of 30 free throws, assuming your free throw percentage is 60%? P(X≤20)

```r
round(pbinom(20, size = 30, prob = 0.60), 3)
#> [1] 0.824
```

To find the probability that $X$ is greater than some value $x$, $P(X > x)$, you can also use the pbinom command. Since this function gives cumulative probabilities up to and including $x$, you need to subtract $P(X \le x)$ from 1 to find the probability you are looking for.

For example, what is the probability of making more than 20 out of 30 free throws when your free throw percentage is 60%? P(X>20)

```r
round(1 - pbinom(20, size = 30, prob = 0.60), 3)
#> [1] 0.176
```

You now have the edge over most people in betting on coin flips and calculating free throw percentages. Statistics is filled with useful probability distributions.
